The Windows Cache Manager (also referred to as the System Cache) acts as a single system-wide cache that holds driver code, application code, and data for both user-mode applications and drivers. While an application can make API calls in a manner that guarantees its data bypasses this cache, there is no way for an application to guarantee that its data WILL be cached. Because the behavior of the cache depends on a number of factors and is very often non-repeatable, the application and the system administrator can only increase the likelihood that the application data will be cached. In other words, executing the same program multiple times is very likely to result in slightly different cache behavior each time. This is part of the reason why applications such as Microsoft SQL Server and Microsoft Exchange bypass the System Cache.

To illustrate the complexities involved, consider the seemingly simple act of copying a file from one volume to another. Some, but not all, of the factors below were originally described in References 8 and 9.

- Either the source or the destination volume may be a local volume or a network volume

- The access speeds of the source and destination volumes may be the same, or one may be significantly slower than the other. Further, the access speed can change depending on a variety of factors, such as network load, system load from other running applications, and resource usage; for example, I/O may switch from cached to non-cached and vice versa.

- The optimum I/O size for the source and destination volumes may be either the same or significantly different

- If both the source and destination volumes are on a Windows system, then the System Cache is involved in both reads and writes

- The Windows team has spent considerable resources fine-tuning the CopyFile and CopyFileEx APIs. Details are described in Reference 8, but the lesson to take away is that the issue is complex and that further changes are probably forthcoming.

Applications may:

- Call the CopyFile or CopyFileEx APIs and rely on the System Cache

- Call the CreateFile, ReadFile, and WriteFile APIs and use the System Cache for the source only, the destination only, both, or neither
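With the second approach, the choice for each side of the copy comes down to the flags passed to CreateFile. The sketch below is a minimal, portable Python model (it does not call the real Win32 APIs) that picks a dwFlagsAndAttributes value per handle: FILE_FLAG_NO_BUFFERING bypasses the System Cache, while FILE_FLAG_SEQUENTIAL_SCAN keeps the cache in play and only hints that access is sequential. It also shows COPY_FILE_NO_BUFFERING, the CopyFileEx flag (Windows Vista and later) that requests an unbuffered copy. The constant values are those in the Windows SDK headers; the helper names are hypothetical. Unbuffered I/O also requires sector-multiple offsets and transfer sizes, which the last helper illustrates.

```python
from typing import Tuple

# Constant values as defined in the Windows SDK headers.
FILE_FLAG_SEQUENTIAL_SCAN = 0x08000000  # cached I/O, sequential-access hint
FILE_FLAG_NO_BUFFERING = 0x20000000     # bypass the System Cache
COPY_FILE_NO_BUFFERING = 0x00001000     # CopyFileEx dwCopyFlags, Vista and later


def create_file_flags(use_cache: bool) -> int:
    """Hypothetical helper: dwFlagsAndAttributes for one side of a copy."""
    return FILE_FLAG_SEQUENTIAL_SCAN if use_cache else FILE_FLAG_NO_BUFFERING


def copy_flags(cache_source: bool, cache_dest: bool) -> Tuple[int, int]:
    """Return (source flags, destination flags) for a CreateFile-based copy."""
    return create_file_flags(cache_source), create_file_flags(cache_dest)


def copyfileex_flags(bypass_cache: bool) -> int:
    """Hypothetical helper: dwCopyFlags for CopyFileEx."""
    return COPY_FILE_NO_BUFFERING if bypass_cache else 0


def unbuffered_transfer_size(requested: int, sector_size: int) -> int:
    """Round a requested I/O size down to a whole number of sectors.

    With FILE_FLAG_NO_BUFFERING, file offsets and transfer sizes must be
    sector multiples (and buffers sector-aligned in memory). The result is
    never smaller than one sector.
    """
    if requested <= 0 or sector_size <= 0:
        raise ValueError("sizes must be positive")
    return max(sector_size, requested - requested % sector_size)


if __name__ == "__main__":
    # Print the flag pair for each of the four source/destination choices.
    for src in (True, False):
        for dst in (True, False):
            s, d = copy_flags(src, dst)
            print(f"cache source={src!s:5} dest={dst!s:5} -> {s:#010x}, {d:#010x}")
    print(unbuffered_transfer_size(1_000_000, 512))
```

The four loop iterations correspond to the "source only, destination only, both, or neither" choices listed above.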

When all of these variables are combined, the following situations can and do occur when large files are copied:

- The System Cache on the computer hosting the source file fills to a large extent with data from the source file. At the very least, this affects other programs running on that system.

- The System Cache on the computer hosting the destination file fills with data for the destination file. This occurs fairly often because, at the beginning of the copy, all of the destination file data is cached, so writes appear to complete quickly. Once the System Cache on the destination computer reaches a limit, the disk writes that flush that cache may proceed slowly if the disk subsystem is relatively slow.

- To complicate matters further, even after the data is flushed from the System Cache, it may still be cached inside the block storage device (storage array).
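The destination-side behavior can be made concrete with a deliberately simplified model. It assumes the application writes at a constant rate, the disk drains dirty data at a constant, slower rate, and the cache accepts writes freely until a fixed dirty-data limit is reached. Real Windows behavior (lazy writer scheduling, dirty page thresholds, write throttling) is more complex; the function and the numbers are hypothetical.

```python
from typing import Optional, Tuple


def write_back_profile(file_mb: float, cache_limit_mb: float,
                       app_mb_s: float, disk_mb_s: float
                       ) -> Tuple[Optional[float], float]:
    """Toy model of a write-back cache in front of a slower disk.

    Returns (seconds until the cache is full, or None if it never fills,
             seconds until the application's last write is accepted).
    """
    if min(file_mb, app_mb_s, disk_mb_s) <= 0 or cache_limit_mb < 0:
        raise ValueError("sizes and rates must be positive")
    if app_mb_s <= disk_mb_s:
        # The disk keeps up, so dirty data never accumulates.
        return None, file_mb / app_mb_s
    # Dirty data grows at the difference between the two rates.
    t_fill = cache_limit_mb / (app_mb_s - disk_mb_s)
    accepted_mb = app_mb_s * t_fill
    if accepted_mb >= file_mb:
        # The whole file is accepted before the limit is hit: writes look fast.
        return None, file_mb / app_mb_s
    # After the limit, new writes are admitted only as fast as the disk drains.
    return t_fill, t_fill + (file_mb - accepted_mb) / disk_mb_s
```

For example, write_back_profile(4000, 1000, 200, 50) models a 4000 MB file, a 1000 MB cache limit, a 200 MB/s writer, and a 50 MB/s disk. The cache fills after about 6.7 seconds, having accepted about 1333 MB at the full 200 MB/s; the application's last write is accepted after 60 seconds, with roughly 1000 MB still dirty in the cache (another 20 seconds of flushing at 50 MB/s).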

When problems that may involve the System Cache are suspected, an administrator can:

- Inspect the application being used and switch to a different application that explicitly does not use the System Cache. The Microsoft Server Performance Team Blog (Reference 7) explicitly suggests using the Microsoft Exchange EseUtil utility as a file copy tool. The legal implications of using software shipped with Microsoft Exchange on a regular file server are beyond the scope of this document and are best decided by your legal department.

- Use other means to influence the System Cache, for example by running another application that consumes System Cache capacity without otherwise unduly loading the system.

- Attempt to administer System Cache behavior using built-in utilities and/or registry settings.

System caching can be controlled using administrative utilities and/or a registry value.

To change the setting by editing the registry (as always, be careful when making registry changes, and do so at your own risk), edit the DWORD value LargeSystemCache under the registry key

HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management

By default this DWORD is set to one (enabled) on Server SKUs and to zero (disabled) on desktop SKUs.
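To check the current value without changing it, the read-only Python sketch below uses the standard-library winreg module (available only on Windows) to query LargeSystemCache; on other platforms it returns None. The helper names are hypothetical, and reading the value under HKLM does not modify anything.

```python
import sys
from typing import Optional

MEMORY_MANAGEMENT_KEY = (
    r"SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management"
)


def read_large_system_cache() -> Optional[int]:
    """Return the LargeSystemCache DWORD, or None if absent or not on Windows."""
    if sys.platform != "win32":
        return None
    import winreg  # standard library, Windows only

    try:
        # OpenKey defaults to read-only access (KEY_READ).
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, MEMORY_MANAGEMENT_KEY) as key:
            value, value_type = winreg.QueryValueEx(key, "LargeSystemCache")
    except FileNotFoundError:
        # The key or the value does not exist.
        return None
    return value if value_type == winreg.REG_DWORD else None


def describe_large_system_cache(value: Optional[int]) -> str:
    """Translate the raw DWORD into the meaning described in the text."""
    if value is None:
        return "not set"
    if value == 1:
        return "enabled (favors the System Cache)"
    if value == 0:
        return "disabled (favors programs)"
    return f"unexpected value {value}"


if __name__ == "__main__":
    print(describe_large_system_cache(read_large_system_cache()))
```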

On Windows XP, Microsoft provides a GUI for making the same change, which is preferable to editing the registry. Figure 2 shows the GUI that results from launching the Control Panel System applet and clicking the Advanced tab.

Figure 2 – Windows XP Control Panel System Applet Advanced Tab

Figure 3 shows the resulting GUI for adjusting the System Cache size, reached from the Advanced tab shown in Figure 2 on a Windows XP system.

Figure 3 – Windows XP Control Panel System Applet Advanced Tab to adjust System Cache Size

Note that this GUI for changing the System Cache size was removed in Windows Vista.

Windows Server 2003, Windows Vista, and Windows Server 2008 Block Storage Cache Administration

Recall the earlier explanation of the bug in previous versions of Windows that ignored application requests to ensure that data and metadata were committed to storage media, and the subsequent fix made in Windows 2000 SP3 and Windows XP SP2. To allow system administrators to make an informed choice, Microsoft made available a cache administration utility called DskCache.exe. This utility was available only by calling Microsoft PSS, although it could be obtained at no charge. To make it very clear that this capability should be used only in rare circumstances, Microsoft labeled the equivalent setting “Power Protected Write Cache” and shipped it natively with Windows Server 2003 and later versions of Windows. The name emphasizes that the setting should be used only when the administrator is sure that the disk storage cache has a battery backup to ensure data integrity.
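From the application side, a request to commit data to storage media is typically a flush. As a portable sketch (Python rather than the Win32 API), the hypothetical helper below writes a file and calls os.fsync before returning. On Windows, Python documents os.fsync as calling the C runtime _commit() function, which flushes the file through FlushFileBuffers. Whether the data then actually reaches the media still depends on the disk honoring the flush, which is what the write cache policy discussed in this section controls.

```python
import os


def durable_write(path: str, data: bytes) -> None:
    """Hypothetical helper: write data and ask the OS to commit it before returning."""
    # O_BINARY exists only on Windows; it prevents newline translation there.
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_BINARY", 0)
    fd = os.open(path, flags, 0o644)
    try:
        view = memoryview(data)
        # os.write may write fewer bytes than requested, so loop until done.
        while view:
            written = os.write(fd, view)
            view = view[written:]
        # Ask the OS to flush the file's buffered data to the device.
        os.fsync(fd)
    finally:
        os.close(fd)
```

If the volume's “Enable advanced performance” option is selected, the resulting flush request may not be passed on to the disk, so this call alone is not a guarantee of durability.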

In Windows Server 2003 and later versions of Windows, the equivalent of the DskCache.exe tool is built in. To use this feature:

- Start Device Manager

- Select the drive for which you wish to administer the caching policy

- Select Properties

- Click the Policies tab

- Look for the option “Enable write caching on the disk” and make sure it is selected

- Just below that, look for the option “Enable advanced performance”. This option favors throughput and speed at the potential risk of data corruption.

Figure 4 shows the GUI that results from following these steps.

Figure 4 – Windows Server 2003, Windows Vista & Windows Server 2008 disk caching policy administration

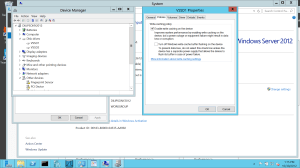

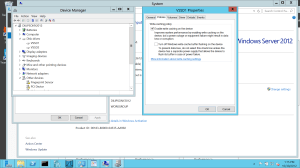

Figure 5 shows the disk caching policy GUI in Windows Server 2012.

Figure 5 – Windows Server 2012 disk caching policy administration

Conclusion

This article described means by which application programmers can:

- Ensure that their file level data does not get cached in the Windows System Cache

- Ensure that their file data does not get cached in the block storage layer and does get committed to storage media, given the correct hardware

- Attempt to ensure, with no guarantee of success, that their file data does indeed get cached in the Windows System Cache

This article also described means by which system administrators can attempt to ensure that data is committed to storage media and is not held in either the System Cache or any block storage cache.

References

1. Microsoft KB 241374: Read and Write Access Required for SCSI Pass Through Request, http://support.microsoft.com/kb/241374/EN-US/

2. Microsoft KB 8373314: About Cache Manager in Windows Server 2003

3. Microsoft KB 332023: Slow Disk Performance When Write Caching Is Disabled

4. Dilip Naik: Nuances of Windows NT and SCSI disk performance (article)

5. Force Unit Access Proposal

6. Microsoft KB 870894: You receive a “Delayed Write Failed” error message in Windows XP Service Pack 2 or Windows XP Tablet PC Edition 2005

7. Slow Large File Copy Issues, Microsoft Server Technical Support Performance Team Blog, http://blogs.technet.com/askperf/archive/2007/05/08/slow-large-file-copy-issues.aspx

8. Inside Vista SP1 File Copy Improvements, Mark Russinovich Blog, http://blogs.technet.com/markrussinovich/

9. Server Generates Delayed Errors Copying Very Large Files, http://www.eggheadcafe.com/software/aspnet/32252624/server-generates-delayed.aspx

10. Microsoft KB 920739: Decreased Performance when copying files larger than 500 MB, http://support.microsoft.com/kb/920739

11. Serial ATA Program Revision 1.2, http://www.sata-io.org/documents/Interop_UnifiedTest_Rev1_2_v10_091707_000.pdf

12. Disks, Lies, and damn disks, http://perspectives.mvdirona.com/2008/04/17/DisksLiesAndDamnDisks.aspx

13. Serial ATA in the Microsoft operating system environment, http://www.microsoft.com/whdc/device/storage/serialATA_FAQ.mspx

14. Enforcing Database Recoverability on Disks that lack Write-Through, ftp://ftp.research.microsoft.com/pub/tr/TR-2008-36.pdf