Microsoft is investing heavily in the Network Attached Storage (NAS) protocol SMB 3 and is clearly laying out a road map that suggests NAS is the future as opposed to Direct Attached Storage (DAS). Consider:

- SQL Server 2012 system d/b and user d/bs, as well as Hyper-V 2012 workloads can be placed on NAS provided the NAS is SMB 3!

- Microsoft made significant speed improvements in the SMB 3 client and server to have NAS achieve 97% of the speed of DAS, and this is without hardware acceleration.

- Microsoft invested in SMB 3 Multi Channel by aggregating the bandwidth using parallel TCP channels using multiple NICs at the SMB 3 protocol layer. Multi Channel is all about speed AND reliability where failed I/Os are seamlessly moved to a different TCP channel when one channel fails.

- Continuing on the speed theme, Microsoft invested in RDMA support via SMB Direct, which requires not just SMB 3, but also SMB 3 Multi Channel. The maximum IOPS on a Windows system is achieved when using SMB 3 NAS with SMB Direct support, NOT with DAS!

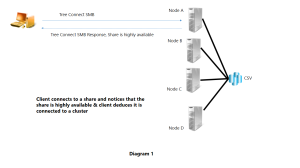

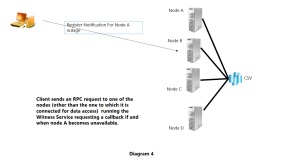

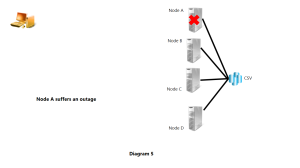

- Going back to the reliability theme, SMB 3 includes support for Persistent Handles, which combined with the Witness Protocol, ensure applications such as SQL, Exchange, and Hyper-V never see an I/O failure, and the I/O is seamlessly moved to a different node as needed. This only works with SMB 3 NAS, and does NOT work with DAS!

- I have been asked numerous times “But Microsoft has invested in Storage Spaces and Tiering where data is moved between SSD and spinning media to optimize performance. Does that not indicate Microsoft advocates DAS?” And my answer has always been “Storage Spaces is even more valuable when used as the storage backing a Windows Server 2012/R2 NAS!” Using Storage Spaces does not mean one has to abandon NAS.

- Microsoft supports deduplication of VDI VMs, but the only supported configuration is with the VDI VM files residing on an SMB 3 based Windows Server 2012 R2 based NAS! (and not with DAS!)

- To provide examples of other Microsoft efforts leveraging SMB3 , consider the simple “copy” or “xcopy” command to say copy a GBs large file. Microsoft changed the CopyFileEx API to leverage all SMB 3 features including SMB 3 credits, SMB 3 Multi Channel, and SMB Direct (RDMA) to ensure the file copy is as fast as possible.

- The Microsoft Hyper-V team re-wrote live migration in Hyper-V 2012 R2 to leverage SMB 3. While migrating a VM, Hyper-V 2012 setup its own TCP channel to copy the VM RAM. Hyper-V 2012 R2 uses SMB 3, and thereby gets the speed/reliability improvements of SMB 3 while doing the same copy.